I've spent a few years programming something that almost nobody will notice, and most people may wonder why it took so long if it's seemingly minor. There are several reasons why this took so long. First and foremost, when I first started the licensing service, I was a complete noob with Rust… my first Rust project was the license activation API method, and I’ve completely rewritten each API method since I wrote them over a year ago.

There is another reason why this took so long. In this day and age, the answer to most questions isn't Jesus—it's money. The internet runs on money. Code that runs on the internet usually costs money. More specifically, I have to pay for every second that my code runs, multiplied by how many gigabytes of RAM I have allocated for it. I also have to pay for database reads and writes, billed by the kilobyte, rounded up.

I "finished" the licensing service's back end over a year ago, but the "default" way to make a licensing service for use with the JUCE library/framework involves an asymmetric cryptographic algorithm similar to RSA. JUCE's RSA implementation solved some problems that RSA has, but JUCE's RSA still has some of the same problems—the keys are massive, and because they are massive, RSA is slow. RSA key generation in particular is so slow, that trying to generate a 2048-bit keypair on the cloud using JUCE would result in the function timing out. When developers would try to set up a plugin with my licensing service, they would have had to upload a really long private key in an online form because I could not afford the processing time to generate keypairs securely and quickly enough. Also, with the private keys being stored in the database alongside with the public key, the database storage would exceed 1 kilobyte, and possibly 2 kilobytes, increasing the cost for each read and each write.

In addition to the financial costs of RSA, the "default" way to perform encryption with JUCE RSA was not constant-time, meaning that someone could time server responses to deduce the private key. Switching to a constant-time implementation would result in even slower code, so that was not a great option.

There are likely multiple ways to solve these problems, but the one that I found to be the most promising was to use elliptic curve cryptography (ECC). The benefits of ECC include reduced key size and improved speed, but ECC generally relies on what are known as "nothing-up-my-sleeve curves", which is another way of saying that the mathematician(s) who made the curves allegedly don't know of a mathematical "backdoor" for that particular elliptic curve, so I just had to pick one. I’m a bit indecisive, so my Rust library uses generics for the ECC usage. Thankfully, I did not have to fully implement ECC due to the availability of open source libraries, but I still wanted to implement a cryptosystem with automatically rotating private keys to reduce the window for attacks. I wanted to use IDs to correspond to private keys, similar to storing the private key in a database with a specified ID primary index; however, I wanted to do this without storing private keys in a database because it's not as secure and it takes up space, and you would have to perform a database read operation just to

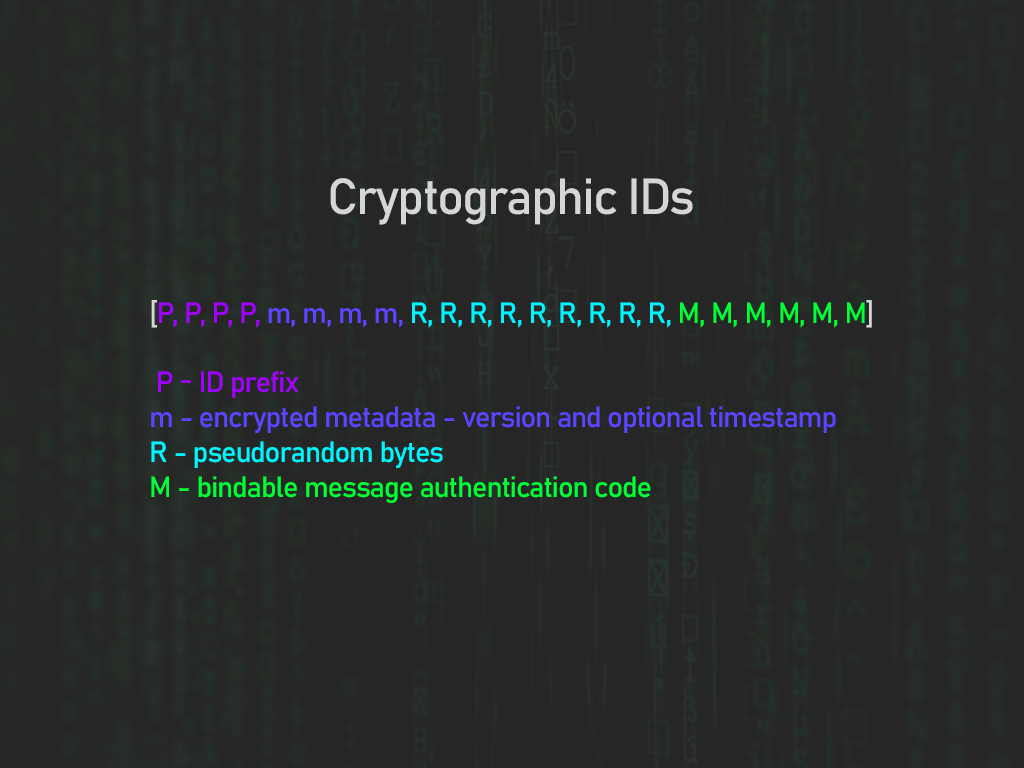

It is possible to determine the probable authenticity of a key ID by using message authentication codes (MACs). A MAC is a random-looking piece of data that is generated from a secret key and some input data that you want to authenticate. MACs can also be truncated to fit in a smaller space, at the cost of security. I chose to make an ID type that accepts an ID length and MAC length, where the MAC is at the end of the ID. There is only one possible output for each MAC, so for an N-byte MAC, there is a 1 in 28*N probability that a randomly generated value will pass the validation, but on average, it will take about 28*N - 1 attempts to pass validation. When a MAC in a client-supplied ID does not match our calculated output, we can assert that the supplied ID is inauthentic, and therefore, it will definitely not exist in our "database."

We can safely assume that a MAC-validated ID is in our "database" because using private keys does not expose the private key's value—using it only proves that we know it. In my library, private keys are used in two main ways: elliptic curve diffie hellman (ECDH) and they are used with the elliptic curve digital signature algorithm (ECDSA).

We can generate private keys from an ID by feeding the ID into a Key Derivation Function (KDF). KDFs take a key as input, along with some info parameters to generate some arbitrary private keys. KDFs can be used over and over again to reproduce the same output, making them an effective solution for generating and regenerating private keys.

KDFs are secure for generating key material because of a common principle of KDFs: changing any bit in the KDF's key and parameters will have a 1 in 2 chance of changing each individual bit of the output. So long as the underlying cryptographic primitive is free of vulnerabilities, the KDF will also be free of vulnerabilities (outside of key storage and the systems that it runs on).

If we want to make keys that are supposed to expire (such as with rotating private key IDs), we might need to embed expiration timestamps into the IDs. There are two main problems with directly embedding timestamps into IDs:

We can solve the first problem by adding a configuration for the ID's type that specifies how many bits the timestamp should be, and then right-shifting the timestamp to remove some unnecessary precision and make our timestamps take up less space. We can then add an extension of the timestamp, that I'll call a "version" that increments as a predetermined amount of time passes. Then, we can subtract the start time of each version from the timestamp to minimize space usage for the timestamp part of the ID.

The second problem can be solved with another MAC computation. First, generate a random ID, then fill 0s where the version should be, where the timestamp should be, and where the MAC should be. Compute the MAC of this slice, and then XOR the version and timestamp with the MAC, and insert the output where the version and timestamp should be. You can save a little more space by having a specific length for both the version and the timestamp, and inserting exactly as many bits as they should consume.

There are, however, some other problems with expiring IDs. By giving the developer the ability to specify how many bits are used for the timestamp and the "version," there could be an "expiration date" for the entire program. In my library... things will just break after the version counter cycles. I think it's okay because the developer is able set their program's expiration date very, very far into the future if they want to. Perhaps I need to emphasize that in the documentation.

Now we can encode timestamps into IDs, but the code is still lacking rekeying functionality. There are generally two ways to obtain a similar outcome to rekeying our cryptographic primitives: we can initialize a new instance of each primitive with a new key and/or salt, or we can generate rotating salts for the primitives to process alongside their regular inputs. The first option is a little more secure, but the second option uses fewer initializations. We’ll go with the second option to reduce initializations. This means we will need to use another cryptographic primitive to generate salts, so we can use ChaCha8Rng, a cryptographically secure pseudorandom number generator (CSPRNG). We don’t need to perform many rounds because the output and key are not exposed anywhere, so we can benefit from a little bit better performance. However, we are still facing some issues:

We can solve the first problem by keying a top-layer KDF that outputs a key for the MAC, the KDF, and also a key for ChaCha8Rng. We can now generate unique salts for each version by setting the nonce of the CSPRNG to a combination of 4-byte words: one word that represents the version, as well as a constant value that corresponds to the type of salt we're generating. Of course, after each time the CSPRNG is used, we'll also need to reset the block position back to 0.

With these version-specific salts, the KDF and MAC can process them alongside the data that they regularly process, adding an extra layer of security since the version (and therefore the salts) are changing over time.

It is possible to base an ID’s MAC or private key on another external ID by appending the external ID to the info parameter of the KDF, or by appending the external ID to the input data being fed to a MAC. Doing this allows us to relate an ID B to another ID A. We can then restrict the usage of ID B to only be used when in the presence of ID A. This requires us to use a single bit in the ID to denote whether or not it should be related to an ID, but it gives us an extra way to validate IDs, and a way to generate client-specific private keys. If two IDs are exactly the same, but they are related to two different IDs, then the resulting private keys will likely not be the same (the probability of a collision with a KDF is much smaller than the probability of a collision with an ID's truncated MAC).

We’ve got IDs that have a MAC and optionally a timestamp, and we can reproducibly turn an ID into a private key, and we can validate these IDs pretty quickly… so what can we do with this? Well, I made a protocol that is based on this ID system that does not require database reads to acquire the latest private keys. It could also be possible to generate clients’ private signing keys to reduce the minimum number of database reads required for each request down to 0, but that would be a little less secure, and it is probable that each request would need to perform a database read anyway.

Then, there are other use cases for our authenticated IDs. For my licensing service, license codes are a type of ID with an embedded MAC at the end, allowing me to know whether or not a license code is likely to be in the database. The license codes are also related to the store’s ID, adding a little “umph” to the validation process. There’s also “product IDs” that are distributed for each product/plugin, which are also based on the store’s ID. These product IDs are related to NIST-P384 signing keys so that I don’t need to store the signing key in the database and can generate it on the fly. As I said in the beginning, I would have been storing private private JUCE RSA keys, possibly would have encoded them in hexadecimal format, resulting in 1025-1027 characters being stored in the database for each product/plugin. It went from +1 KB for each product item size to +0 KB; all I have to store is the ID, which I would have had to do anyway.

If anyone wants to see the code for this, here is the cryptographic library separated into two crates: private_key_generator contains the code for the IDs and turning them into private keys, and key_manager contains the code for the protocol. There is currently only one mode for the protocol, which is a server/client kind of mode for handling API requests.

If anyone wants to see the code in action, this crypto.rs file configures the cryptographic library for use with my licensing service. function_handler_macro.rs, as the name suggests, implements a function handler that every public-facing AWS Lambda function I made uses. And finally, the licensing service's back end can be found here. The utils directory just contains the shared code, and power_tuning contains some live testing code, and the remaining directories are for AWS Lambda functions.

While I may have "finished" the licensing service early on, the back end was not proper. As I made my way through more API methods, I was learning a bit more about organization, yet I was still missing something. It wasn't until I started contributing to open source libraries that I started to get the hang of Rust. Also, I started my cryptography library before I started contributing to open source libraries, and the very first draft was pretty messy. I was manually using ChaCha20Rng as a MAC and KDF, which... it could be done properly as a cipher-based MAC and CMAC-based KDF, but I wasn't doing it by the book. I also didn't know how to use generic types until I had encountered some in open source libraries. After my little journey, I would highly recommend contributing to open source libraries as a way to learn new programming languages.

And to anyone who may be manually implementing a closed-source cryptographic protocol, I would recommend that you open source it—because if you don't, it could be because your code sucks and you know it. If you know that nobody will ever see your code, it will give you an excuse to be messy, cut corners, and misuse cryptographic primitives. I know this because my first draft was messy, I had cut corners, and I was misusing primitives. But the thing about "good" cryptography is that open sourcing a protocol should not weaken it; rather, it creates a public space for you to refine and receive feedback for your code. Speaking of feedback, if anyone would like to review my cryptography, the repo is here. Feel free to open an issue for flaws or missing features.